Abstract

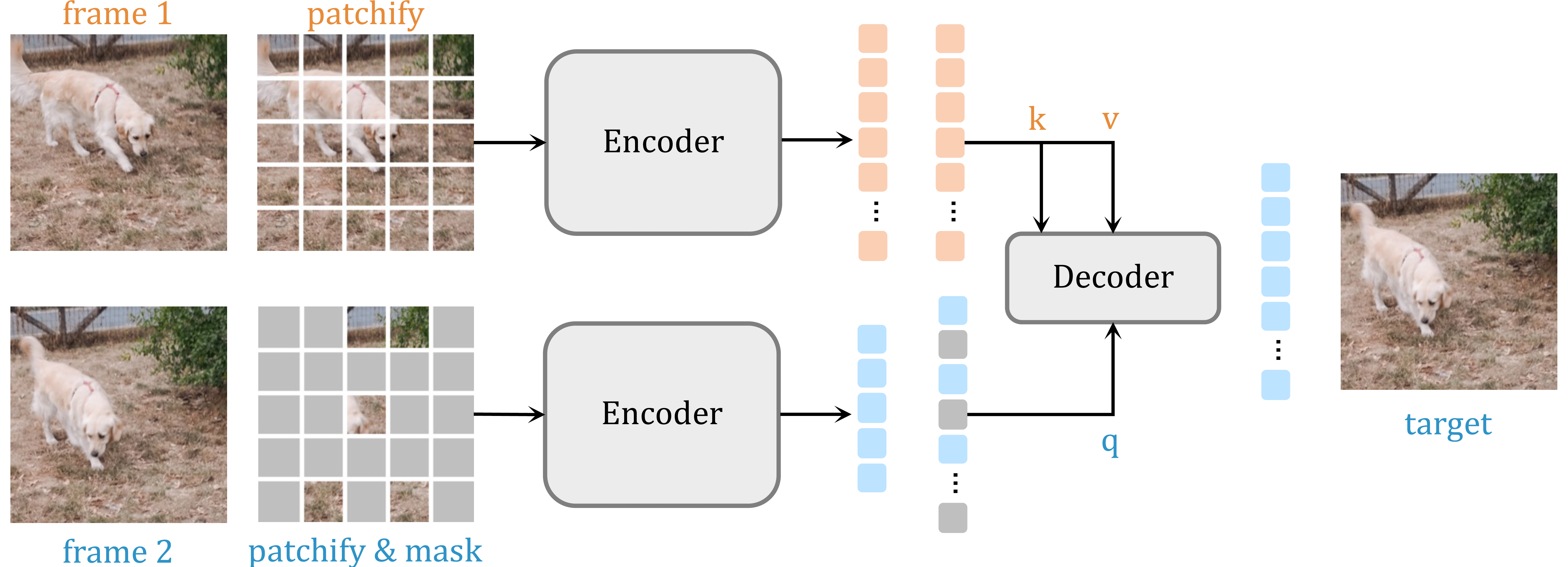

Establishing correspondence between images or scenes is a significant challenge in computer vision, especially given occlusions, viewpoint changes, and varying object appearances. In this paper, we present Siamese Masked Autoencoders (SiamMAE), a simple extension of Masked Autoencoders (MAE) for learning visual correspondence from videos. SiamMAE operates on pairs of randomly sampled video frames and asymmetrically masks them. These frames are processed independently by an encoder network, and a decoder composed of a sequence of cross-attention layers is tasked with predicting the missing patches in the future frame. By masking a large fraction (95%) of patches in the future frame while leaving the past frame unchanged, SiamMAE encourages the network to focus on object motion and learn object-centric representations. Despite its conceptual simplicity, features learned via SiamMAE outperform state-of-the-art self-supervised methods on video object segmentation, pose keypoint propagation, and semantic part propagation tasks. SiamMAE achieves competitive results without relying on data augmentation, handcrafted tracking-based pretext tasks, or other techniques to prevent representational collapse.

Qualitative results

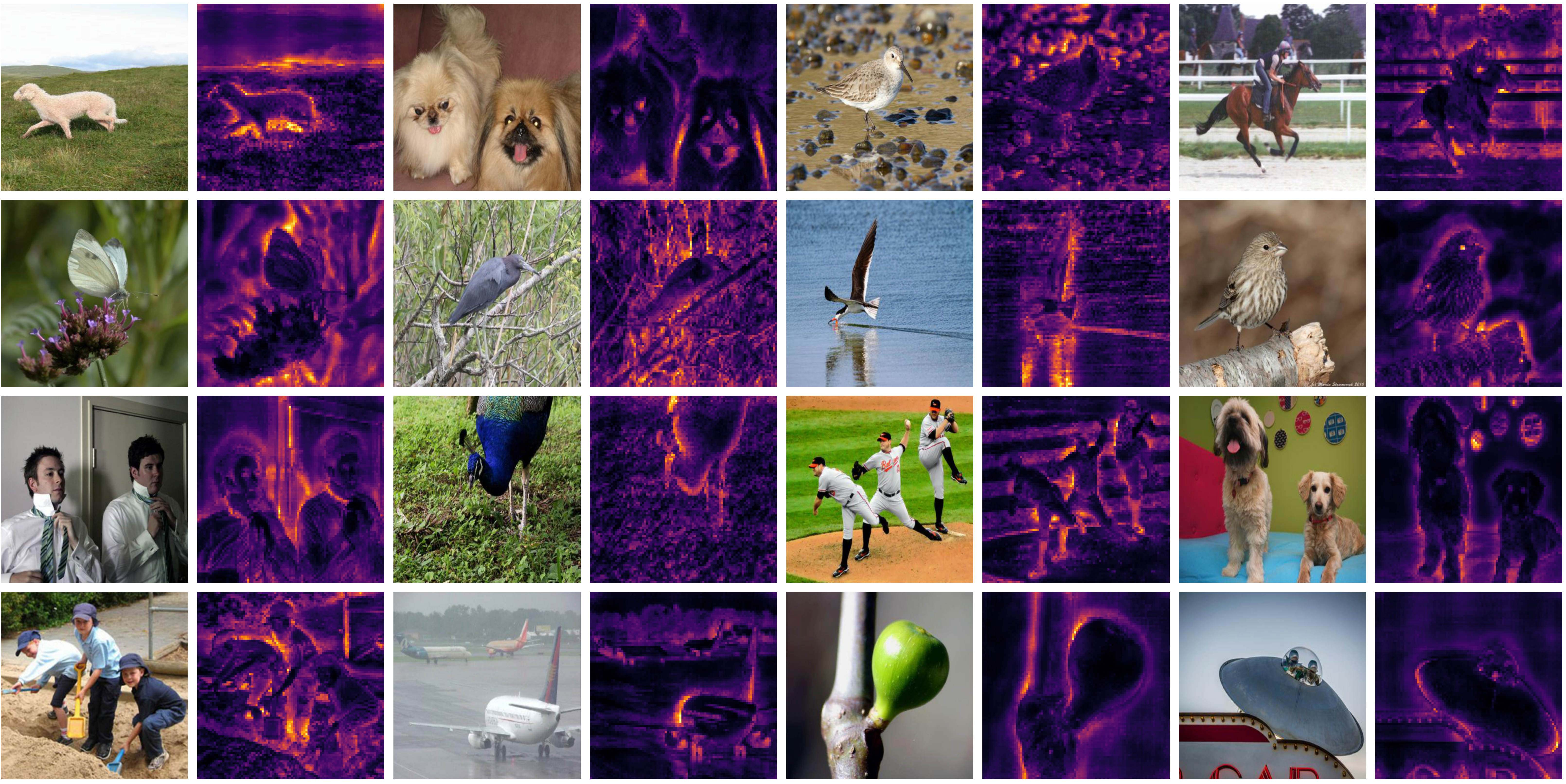

Attention Map Visualization

We visualize the self-attention map of the ViT-S/8 model. We use the [CLS] token as the query and visualize the attention of a subset of heads from the last layer for 720p videos from internet and DAVIS dataset. In addition, we also show attention of a single head from the last layer with 720p images from ImageNet. We find that the model attends to the object boundaries. Unlike contrastive methods, there is no explicit loss function acting on the [CLS] token. These self-attention maps suggest that the model has learned the notion of object boundaries from object motion in videos.

Internet Videos

DAVIS Videos

ImageNet Images